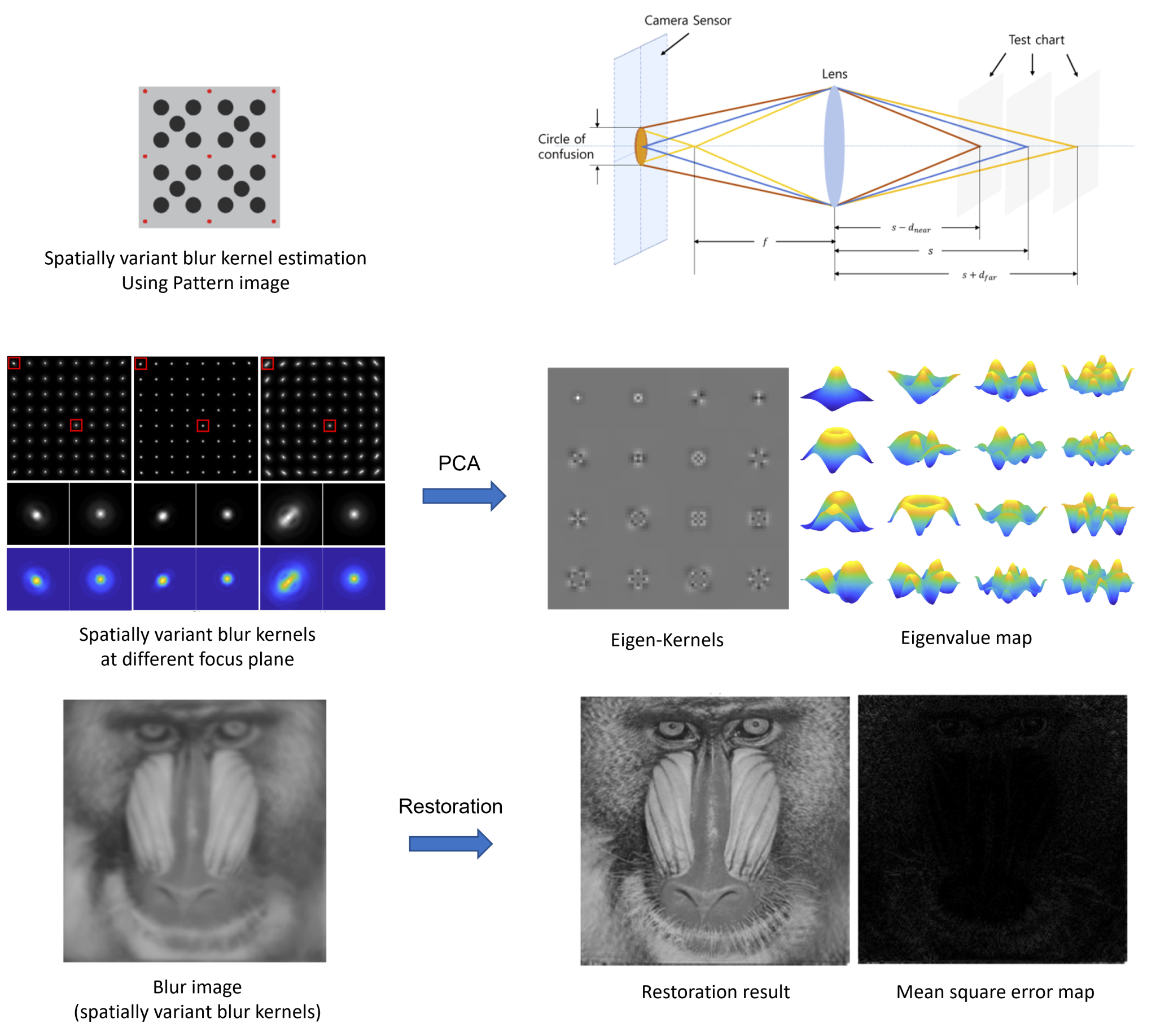

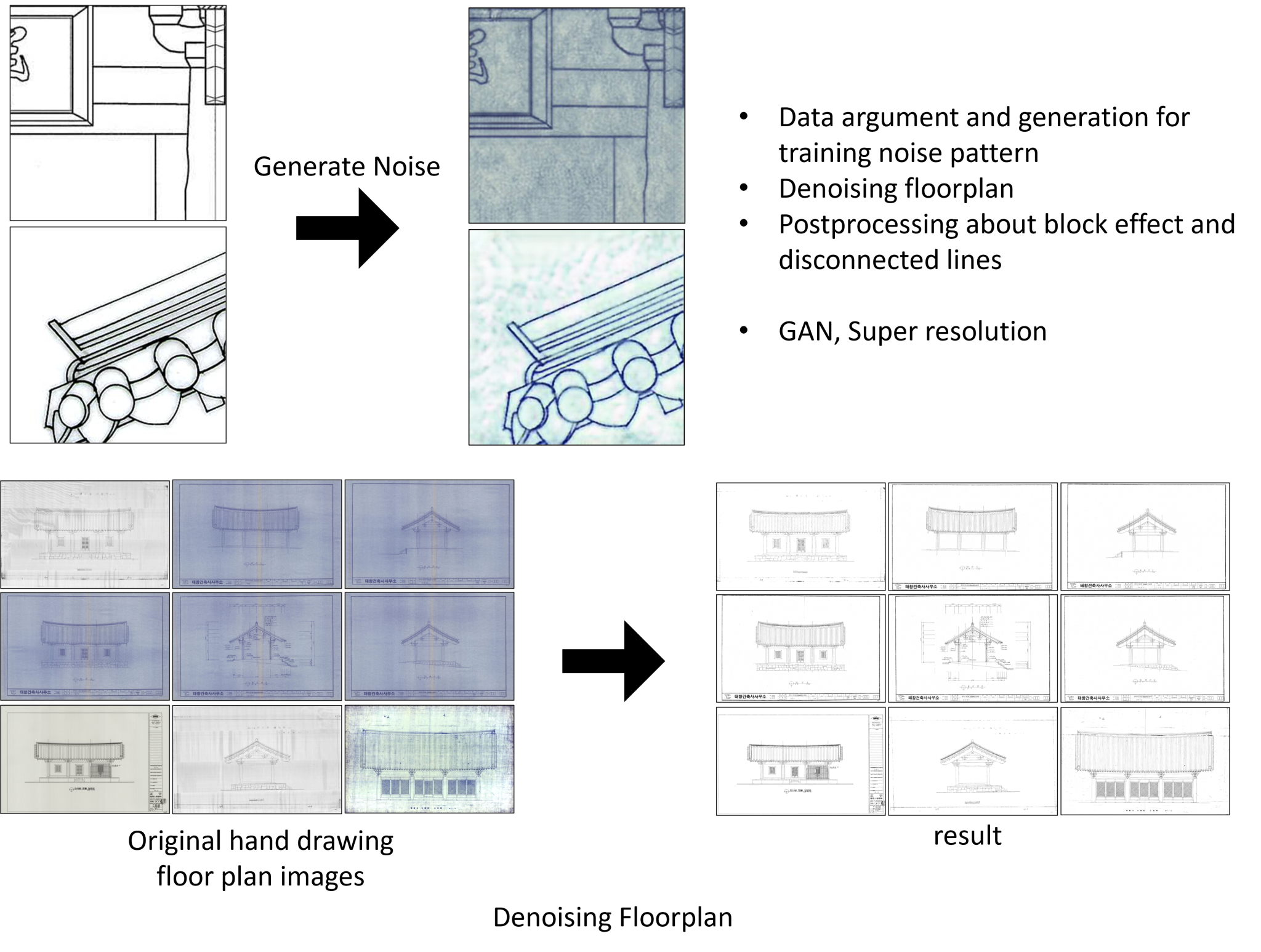

Modeling and restoration of image degradation

When we take an image with a camera, a microscope, or a telescope, we want to acquire a faithful representation of a scene, specimen, or star. But every image suffers from some form of degradation. A lens collects light coming from all directions and focuses it onto a small sensor. Even an expensive lens focused on a still object smears the light out, and the smearing gets worse when the light travels through nonuniform media or when the object is in motion. We may reduce degradation with complicated and expensive equipment, such as lenses consisting of multiple groups of elements and mechanical stabilization. Alternatively, if we know the degradation introduced during acquisition, we can use signal processing to restore a faithful representation of the original from the observed image.

The image acquisition process can be modeled as

g = H f + n,

where g, f, and n are vectors representing the observed, original, and noise images, respectively, and H is a matrix representing the degradation introduced by the acquisition process. Image restoration is the inverse problem of estimating f from g. When the degradation does not change across the sensor (that is, it is shift invariant), it is modeled with a circulant matrix (block circulant with circulant blocks for 2-D images, assuming periodic boundaries) that can be diagonalized by the discrete Fourier transform (DFT). Once the process is written with a diagonal matrix, the analysis of restoration becomes straightforward and its implementation efficient, because each frequency component can be handled independently. Most successful restoration methods rely on this diagonalization of H by the DFT. In real life, however, degradation often varies with location on the sensor, and H is then no longer circulant. Our research focuses on the modeling and restoration of such nonstationary degradation.
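
The role of the DFT can be checked numerically. The sketch below is a 1-D illustration under assumptions that are not taken from our projects: a shift-invariant blur with periodic boundaries, a hypothetical 5-tap box kernel, a made-up test signal and noise level, and a Tikhonov-regularized inverse filter (weight `lam`) swapped in as a simple restoration method. It is not a description of our own algorithms, and the helper names `circulant` and `restore` are hypothetical.

```python
import numpy as np


def circulant(h: np.ndarray) -> np.ndarray:
    """Return the N x N circulant matrix whose k-th column is h shifted by k.

    Multiplying by this matrix is circular convolution with h: a blur that is
    identical at every position (shift invariant) and wraps at the borders.
    """
    return np.stack([np.roll(h, k) for k in range(h.size)], axis=1)


def restore(g: np.ndarray, h: np.ndarray, lam: float) -> np.ndarray:
    """Tikhonov-regularized inverse filter computed with FFTs.

    Solves min_f ||H f - g||^2 + lam ||f||^2. Its solution
    (H^T H + lam I)^{-1} H^T g reduces to an elementwise division per
    frequency once the circulant H is diagonalized by the DFT.
    """
    eig = np.fft.fft(h)  # eigenvalues of H
    f_k = np.conj(eig) * np.fft.fft(g) / (np.abs(eig) ** 2 + lam)
    return np.real(np.fft.ifft(f_k))


N = 64
f = np.zeros(N)
f[20:30] = 1.0                      # hypothetical flat "object"
f[45] = 2.0                         # hypothetical point source
h = np.zeros(N)
h[[0, 1, 2, N - 2, N - 1]] = 0.2    # hypothetical 5-tap box blur centered at 0
H = circulant(h)

# The DFT matrix F diagonalizes H: F H F^{-1} = diag(fft(h)), with F^{-1} = conj(F) / N.
F = np.fft.fft(np.eye(N))
D = F @ H @ (np.conj(F) / N)
print(np.allclose(D, np.diag(np.fft.fft(h))))  # True

rng = np.random.default_rng(0)
g = H @ f + 0.01 * rng.standard_normal(N)      # g = H f + n
f_hat = restore(g, h, lam=1e-3)
print(np.linalg.norm(g - f), np.linalg.norm(f_hat - f))
```

Because H is diagonal in the DFT basis, `restore` never forms or inverts an N × N matrix; it only multiplies and divides FFT coefficients. The `lam > 0` term keeps frequencies where the blur's eigenvalues are close to zero from amplifying the noise. A spatially varying blur is not circulant, so it loses exactly this shortcut.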

Related Projects: AI-based traditional architectural hand drawing to CAD conversion; Nonstationary and asymmetric lens blur restoration; 8K 120Hz AR/VR display SoC; HDR color image processing; Embedded image processing for low-power LED public displays; Smart pico projector parts and engine for 3D HD images; Layerwise super resolution; Generalized minimal residual based parallel image restoration; Contrast enhancement for UHD displays

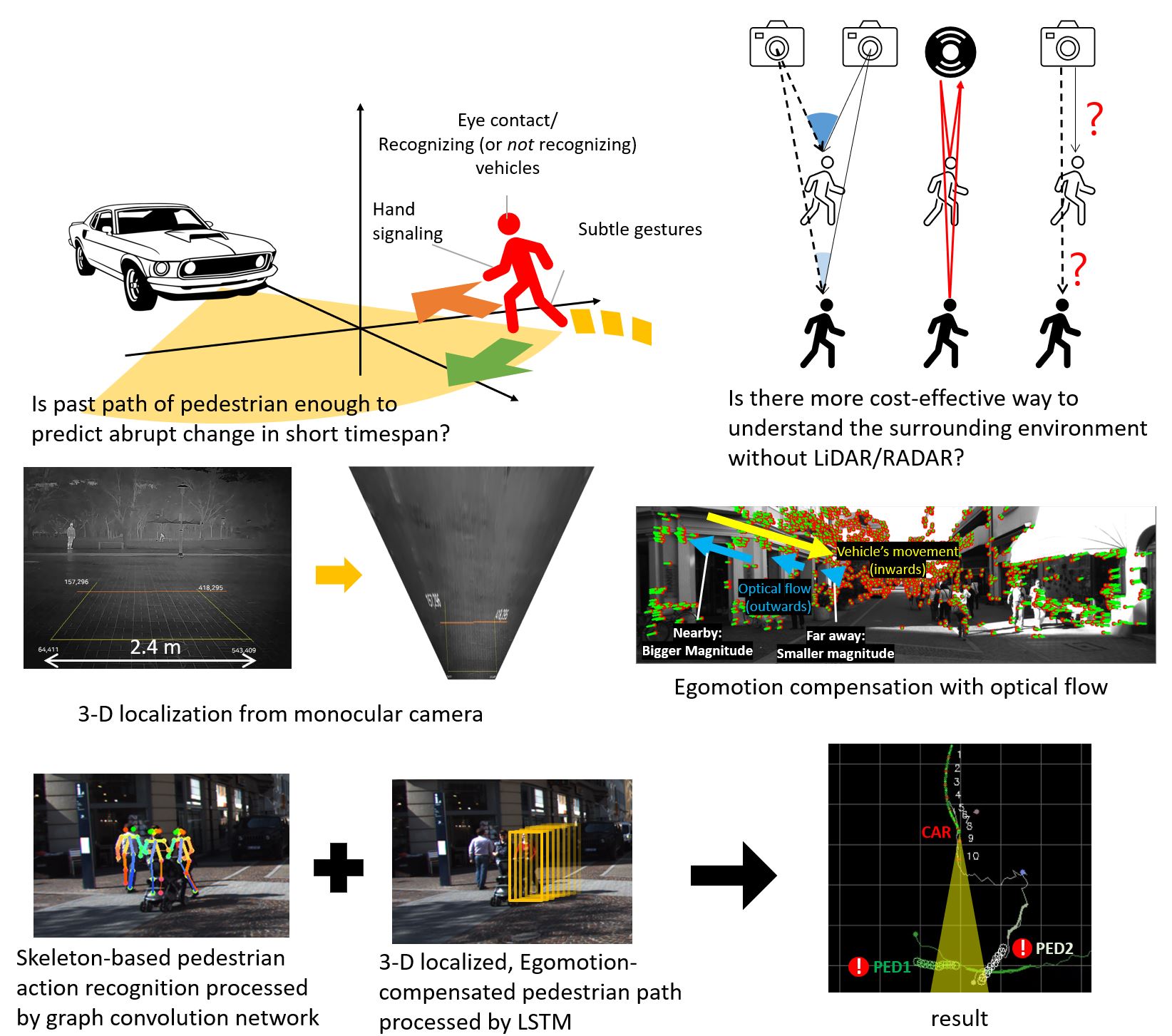

Industrial and vehicular safety

UNIST is located in Ulsan, the industrial capital of Korea. Many heavy industries, including automakers, shipbuilders, and steelmakers, as well as many small and medium-sized manufacturers, are located in and around Ulsan. I have had the privilege of working with industry-leading companies and, through the UNIST Institute of the 4th Industrial Revolution, with local manufacturing businesses. Our research focuses on ensuring workplace safety and on helping small companies in the Korean rust belt innovate.

Related Projects: Self-elevating crane system for installation and maintenance of onshore wind power systems; AI-based simultaneous product classification and quality evaluation; Abnormal behavior detection in loading dock; Intelligent image masking; Pedestrian behavior recognition; Back seat passenger detection; Personal protective equipment detection and safety distance monitoring; Deep network optimization for pedestrian detection; Infrared camera based vehicular part quality evaluation; Deep belief network for face recognition; Vision-based command recognition for cleaning robot

Data Representations with Deep Neural Networks

Deep neural networks have become an essential tool for image processing. Conventional approaches to signal processing usually start with a domain expert analyzing the characteristics of the data to find a good representation of it. The intended processing, such as compression, detection, or classification, is then carried out on that representation. Now, deep networks are trained to perform the entire processing end to end, learning their own representation along the way. One problem with deep networks is that the representation a network learns is not ordered by importance: the workload is shared across all of the network's resources rather than concentrated in a few of them. Our research focuses on learning ordered low-dimensional representations with deep neural networks.
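
To make "ordered by importance" concrete, the following sketch uses principal component analysis (PCA) as a linear analogue. PCA is swapped in purely for illustration; it is not a deep network and not our method, and the data, dimensions, and helper `prefix_error` are hypothetical. PCA codes are ordered, so keeping only the first k coordinates gives the best possible rank-k reconstruction (in the Frobenius norm). Rotating the same code by a random orthogonal matrix still reconstructs the data perfectly when every coordinate is kept, but spreads the information over all coordinates, much like a representation whose workload is shared by all units.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 500 samples in 8-D whose variance decays from one
# (randomly oriented) direction to the next.
scales = np.array([5.0, 3.0, 2.0, 1.0, 0.5, 0.25, 0.1, 0.05])
basis, _ = np.linalg.qr(rng.standard_normal((8, 8)))
X = (rng.standard_normal((500, 8)) * scales) @ basis.T
Xc = X - X.mean(axis=0)

# PCA via SVD: rows of Vt are directions sorted by decreasing singular value,
# so column 0 of `codes` carries the most energy, column 1 the next, and so on.
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
codes = Xc @ Vt.T

# An equally complete but unordered code: the PCA code rotated by a random
# orthogonal Q, decoded with the matching rotated basis.
Q, _ = np.linalg.qr(rng.standard_normal((8, 8)))
codes_rot, decoder_rot = codes @ Q, Q.T @ Vt


def prefix_error(z: np.ndarray, decoder: np.ndarray, k: int) -> float:
    """Reconstruction error when only the first k code coordinates are kept."""
    return float(np.linalg.norm(Xc - z[:, :k] @ decoder[:k]))


for k in range(9):
    print(k, prefix_error(codes, Vt, k), prefix_error(codes_rot, decoder_rot, k))
```

Each printed row shows the prefix length k followed by the two errors. The PCA column is never larger than the rotated one, and both reach (numerically) zero at k = 8, so the two codes differ only in how the information is ordered.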

Related Project: UniBrain – Ultimate neuromorphic intelligent brain system engineering

Light field media coding and its applications

The light field describes the amount of light flowing in every direction through every point in space. Researchers are working on the acquisition, compression, transmission, and display of light field media, with many interesting AR, VR, and immersive video applications in mind. ETRI is leading a large research project involving eleven research groups. I am participating in the project, working on efficiently representing the geometry of objects in space. The basic tool is the truncated signed distance function (TSDF), which changes sign at the surface of an object and is truncated (clipped to a fixed range) far from it. We are developing efficient TSDF-based representations using test video streams acquired with 21 × 21 arrays of 4K cameras. This work is an exciting opportunity to contribute to the next-generation video compression standard. We are particularly interested in TSDF-based surface representation because it can also be used for 3D object analysis and recognition.
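
The sign-change property can be illustrated in one dimension. This is a minimal sketch under assumptions that are not from the project: a hypothetical scene containing a single sphere, the common "positive outside, negative inside" sign convention, values normalized by a truncation distance `trunc`, and samples taken only along the x-axis. Real TSDF pipelines fuse many depth measurements into a 3-D voxel grid, which this does not attempt. The helpers `sphere_tsdf` and `zero_crossings` are hypothetical.

```python
import numpy as np


def sphere_tsdf(points: np.ndarray, center: np.ndarray, radius: float,
                trunc: float) -> np.ndarray:
    """Truncated signed distance to a sphere, normalized to [-1, 1].

    Positive outside the surface, negative inside (one common convention);
    samples farther than `trunc` from the surface are clipped to +/-1.
    """
    d = np.linalg.norm(points - center, axis=-1) - radius
    return np.clip(d / trunc, -1.0, 1.0)


def zero_crossings(xs: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Linearly interpolate the positions where sampled values change sign."""
    s = np.sign(values)
    idx = np.nonzero(s[:-1] * s[1:] < 0)[0]
    x0, x1 = xs[idx], xs[idx + 1]
    v0, v1 = values[idx], values[idx + 1]
    return x0 - v0 * (x1 - x0) / (v1 - v0)


# Hypothetical scene: a sphere of radius 0.5 at the origin, sampled along the
# x-axis with spacing 2/199 (so no sample lands exactly on the surface) and a
# truncation band of 0.05.
xs = np.linspace(-1.0, 1.0, 200)
points = np.stack([xs, np.zeros_like(xs), np.zeros_like(xs)], axis=1)
tsdf = sphere_tsdf(points, center=np.zeros(3), radius=0.5, trunc=0.05)
print(zero_crossings(xs, tsdf))  # approximately [-0.5, 0.5]
```

Within the truncation band the sampled TSDF is linear along this axis, so linear interpolation between neighboring samples of opposite sign recovers the surface position. Outside the band every sample is clipped to ±1 and carries no geometric detail.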

Related Projects: Audio/video coding and light field media fundamental technologies for ultra realistic tera-media; Object detection and distortion correction for 360 view camera system; Computational photography with rotating selfies; Multi-view image acquisition system for face recognition; Dynamic view generation for augmented reality; OpenGL based SMMD Simulator